The tech: Sequencers

Where research meets the precision of code, unlocking an endless symphony of possibilities.

In the modular movement, Celestia has played, and still plays, a significant role: its architecture has decoupled Data Availability (DA) and consensus from execution ever since the project launched as LazyLedger back in 2019. Over time, multiple bright minds in the space found that not only DA, consensus, and execution could be modularized, but other parts of the tech stack as well. One of these components has attracted more buzz than the others, for a variety of reasons that we'll try to unpack in this article: the sequencer. You may well never have heard of this particular piece of the tech stack, but its importance in a modular environment shouldn't be underestimated. Before we dive deeper into the concept of sequencers, let's take a look at rollups first.

Rollup mechanics

In a monolithic blockchain like Ethereum, all of the following components are unified into one single blockchain:

Execution

This is the environment where application state changes are executed.

Settlement

The specific responsibilities can differ depending on the implementation, but typical tasks may involve validating or settling proofs and managing cross-chain asset transfers or arbitrary messaging.

Data Availability

Guaranteeing that the transaction data linked to rollup block headers has been published and is accessible, so that anyone can reconstruct the state.

Consensus

This is the layer that agrees upon a set of predefined rules for the ordering of transactions, which are then accepted by validator nodes.

By separating these functions, we can create a landscape in which rollups can dominate. There are a variety of reasons to adopt a rollup-centric future, such as solving the current scalability challenges and offering (full) autonomy. There are multiple ways to design a rollup, such as smart contract rollups, sovereign rollups and validiums. Regardless of the exact implementation, a rollup generally consists of three main actors:

Light clients

Light clients only receive the block headers; they neither download nor process any transaction data (otherwise they would be fulfilling the tasks of a full node). Light clients can check state validity via fraud proofs, validity proofs or data availability sampling.

Full nodes

Rollup full nodes retrieve complete sets of rollup block headers and transaction data. They handle and authenticate all transactions to compute the rollup's state and ensure the validity of every transaction. If the full nodes were to find an incorrect transaction in a block the transaction would be rejected and omitted from the block.

Sequencers

A sequencer is a specific rollup node with the primary tasks of batching transactions and generating new blocks.

To give a brief overview on how rollups work:

Aggregation: Rollup nodes collect multiple transactions and create a compressed summary, known as a rollup block, which contains the essential information needed for transaction verification and state updates.

Verification: These rollup blocks are then submitted to the main blockchain, where validator nodes verify the validity of the transactions within the block and ensure that they comply with the predefined rules.

Once the block is validated, the state of the rollup is updated on-chain, reflecting the outcome of the transactions. This way, rollups achieve significant scalability improvements by reducing the computational load and data storage requirements on the main blockchain while still maintaining a secure and trustless environment for transaction processing. What effectively is being done here is that both the computation and state storage are moved off-chain but some data will be kept on-chain. Vitalik pointed out some compression tricks that can be applied such as (but not limited to) superior encoding, omitting the nonce, replacement of 20-byte addresses with an index and more.
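To make the address-index trick a little more tangible, here is a minimal sketch. It keeps a registry that maps each 20-byte address to a small integer, so a batch can carry the index instead of the full address. The `AddressRegistry` class and the 4-byte index width are assumptions made for illustration and are not taken from any specific rollup.

```python
class AddressRegistry:
    """Hypothetical registry mapping 20-byte addresses to compact indices."""

    INDEX_BYTES = 4  # assumed index width; a real rollup may choose differently

    def __init__(self):
        self._index_of = {}
        self._addresses = []

    def index_for(self, address: bytes) -> int:
        """Return the index of an address, registering it on first use."""
        if len(address) != 20:
            raise ValueError("expected a 20-byte address")
        if address not in self._index_of:
            self._index_of[address] = len(self._addresses)
            self._addresses.append(address)
        return self._index_of[address]

    def encode(self, address: bytes) -> bytes:
        return self.index_for(address).to_bytes(self.INDEX_BYTES, "big")

    def decode(self, encoded: bytes) -> bytes:
        return self._addresses[int.from_bytes(encoded, "big")]


registry = AddressRegistry()
alice = bytes.fromhex("11" * 20)
compact = registry.encode(alice)
saved = len(alice) - len(compact)  # bytes saved per address reference
```

Note that the full address still has to be published once, when it is first registered; the saving applies to every later reference.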

Optimistic design or not?

To determine the validity of the state transition, two types of proof can be utilized: validity proofs for Zero Knowledge Rollups (ZKRs) and fraud proofs for Optimistic Rollups (ORs). Of course, both have their particular pros and cons.

Optimistic Rollups

Optimistic rollups perform transactions off-chain and commit transaction data to the underlying base layer. Operators of optimistic rollups bundle numerous off-chain transactions together in sizable batches before posting them to the base layer. This process facilitates the distribution of fixed expenses across numerous transactions within each batch, thereby reducing charges for users. In conjunction with the batched transactions, multiple aforementioned compression techniques are applied to minimize the data posted to the base layer.
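The amortization argument can be made concrete with a small calculation. The function below is only a sketch, and the gas figures are placeholders chosen for illustration, not measurements from any network.

```python
def cost_per_tx(fixed_batch_cost: int, data_cost_per_tx: int, batch_size: int) -> float:
    """Per-transaction cost when a fixed batch overhead is shared by the whole batch."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    return fixed_batch_cost / batch_size + data_cost_per_tx


# Placeholder numbers (assumptions): 200_000 gas of fixed overhead per batch,
# 300 gas of posted data per transaction.
for n in (1, 10, 100, 1000):
    print(n, cost_per_tx(200_000, 300, n))
```

As the batch grows, the fixed share shrinks toward zero and the per-transaction data cost dominates, which is why the compression techniques matter as well.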

The term "optimistic" characterizing these rollups stems from their assumption that off-chain transactions are valid, resulting in the absence of proofs of validity for transaction batches uploaded on-chain. This distinguishing feature separates them from zero-knowledge rollups, which utilize cryptographic proofs of validity for off-chain transactions. Instead of cryptographic proofs, optimistic rollups depend on a mechanism for detecting fraudulent activity to identify instances where transactions are inaccurately computed.

Following the submission of a rollup batch, a challenge period begins during which anyone can contest the outcome of a rollup transaction by generating a fraud proof. If a fraud proof succeeds, the rollup protocol re-executes the transaction(s) and adjusts the rollup's state accordingly. Additionally, a successful fraud proof leads to the slashing of the stake of the sequencer who included the erroneously executed transaction in a block.

Should the rollup batch remain unchallenged (meaning all transactions are assumed to be correctly executed) after the challenge period concludes, it’s accepted as valid and finalized on the base layer. Others can already build upon a rollup block that has not yet been finalized, with a caveat: transaction outcomes may be overturned if they were based on a previously published, inaccurately executed transaction.
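The lifecycle described above can be sketched as a small state machine. This is a toy model under stated assumptions: the `OptimisticRollup` class, the window length, and the choice to slash the full stake are all hypothetical, and the fraud proof itself is reduced to comparing a re-computed state root with the claimed one.

```python
from dataclasses import dataclass

CHALLENGE_PERIOD = 100  # assumed window length, in base-layer blocks


@dataclass
class Batch:
    sequencer: str
    claimed_state_root: str
    posted_at: int
    status: str = "pending"  # pending -> finalized | reverted


class OptimisticRollup:
    def __init__(self, stakes):
        self.stakes = dict(stakes)  # sequencer -> bonded stake
        self.batches = []

    def post_batch(self, sequencer, claimed_state_root, now):
        batch = Batch(sequencer, claimed_state_root, now)
        self.batches.append(batch)
        return batch

    def challenge(self, batch, recomputed_state_root, now):
        """A fraud proof succeeds if re-execution disagrees within the window."""
        in_window = now < batch.posted_at + CHALLENGE_PERIOD
        if batch.status != "pending" or not in_window:
            return False
        if recomputed_state_root == batch.claimed_state_root:
            return False  # the batch was executed correctly; nothing to prove
        batch.status = "reverted"
        self.stakes[batch.sequencer] = 0  # assumption: the whole stake is slashed
        return True

    def finalize(self, batch, now):
        """Finalize a batch that survived the challenge period unchallenged."""
        if batch.status == "pending" and now >= batch.posted_at + CHALLENGE_PERIOD:
            batch.status = "finalized"
        return batch.status
```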

Zero Knowledge Rollups

ZK-rollups aggregate transactions into batches that are processed off-chain, reducing the volume of data that needs to be uploaded to the blockchain. ZK-rollup sequencers consolidate the changes required to represent the entire batch of transactions into a summary, rather than transmitting each transaction separately. To verify that the state change occurred correctly, they generate validity proofs.

Unlike optimistic rollups, ZK-rollups rely on validity proofs in the form of Zero Knowledge proofs (e.g., SNARKs or STARKs) instead of fraud-proof mechanisms. Similar to optimistic rollup systems, a sequencer collects transaction data from L2 and is responsible for submitting the Zero Knowledge proof to L1 (and, depending on the exact architecture, possibly for generating it). The sequencer’s stake can be slashed if they act maliciously, which incentivizes them to post valid blocks (or proofs of batches). The prover (or the sequencer, if both are combined into one role) generates unforgeable proofs of the execution of transactions, demonstrating that the new states and executions are correct.

Subsequently, the sequencer submits these proofs to the verifier contract on the Ethereum mainnet, along with transaction data or at least state differences. Technically, the responsibilities of sequencers and provers can be combined into one role. However, because proof generation and transaction ordering each require highly specialized skills to perform adequately, splitting these responsibilities prevents unnecessary centralization in a rollup’s design. In many cases, the sequencer, alongside the Zero Knowledge proof, only submits the changes in L2 state to L1, providing this data to the verifier smart contract on the Ethereum mainnet in the form of a verifiable hash.

Since ZK-rollups only need to provide validity proofs to finalize transactions, funds transferred between a ZK-rollup and the base layer are not subject to a challenge-period delay. Exit transactions are executed once the ZK-rollup contract has verified the validity proof.

Purpose of the rollup

Regardless of the mechanisms employed in the rollup design and the chosen proving system, a rollup is constructed with a specific purpose in mind and can be categorized into two types: general-purpose and application-specific rollups. An application-specific rollup offers the advantage of customization according to the developers' preferences. However, it may face limitations in terms of adoption. On the other hand, a general-purpose rollup can be shared among numerous applications, promoting composability.

This distinction prompts the question of why a relatively successful rollup, in terms of usage and revenue generation, would opt for decentralization. The decision can appear counterintuitive, as the rollup would seem to be forfeiting revenue that it currently captures itself.

Current status of sequencers

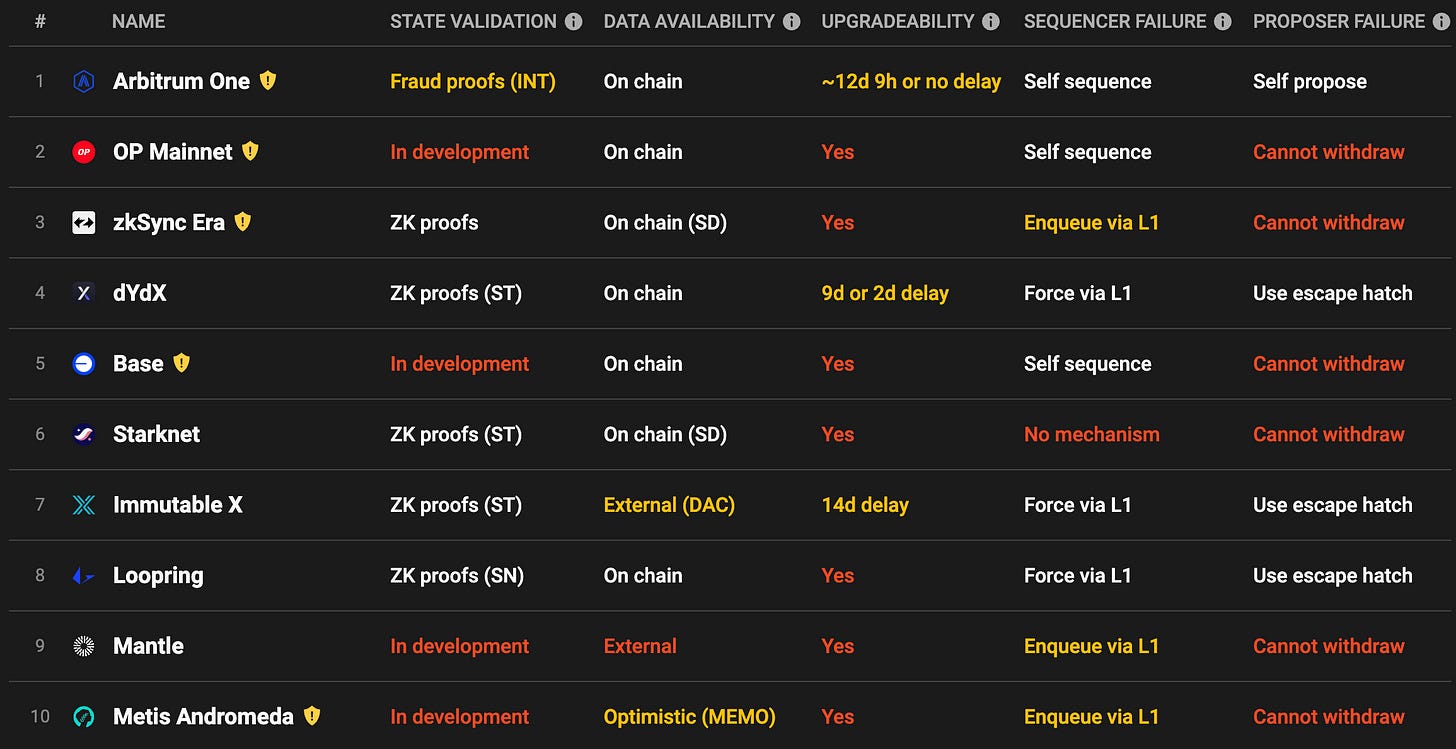

Now that we know the role of sequencers within rollups, let's look at the current sequencer designs and their trade-offs. L2BEAT offers an overview of the sequencer status of the most prominent L2s and, as we can derive from the image, the picture doesn’t look great, although changes and development are taking place as we speak.

Currently, most sequencers rely on a single centralized operator, often managed by the team responsible for the respective rollup. To be honest, Arbitrum can hardly be blamed for continuously stating that they will decentralize their sequencer “soon” if you consider the revenue it generates for the rollup. As of the time of writing, there’s over 5800 ETH reserved for the sequencer according to Arbiscan.

From a user's perspective, interacting with a centralized or a decentralized sequencer doesn’t differ much in terms of the steps needed for a transaction to be executed and included in a block. But a single centralized sequencer isn’t desirable from an ideological point of view, and it forces us to make additional trust assumptions, which can lead to:

Single Point of Failure

Generally speaking, there’s no viable alternative if the sequencer goes offline, which can happen due to a variety of issues.

Monopolistic behavior

The sequencer is the one with the rights to obtain Maximal Extractable Value (MEV) profits.

To maximize the profit the sequencer could artificially bloat the price that needs to be paid to get transactions included in the block.

Weak censorship resistance

There is usually no mechanism to circumvent order exclusion or other dubious behavior.

No atomic composability

These ‘siloed’ setups need a cross-sequencer bridge to communicate with other rollups and won’t be atomically composable in any way.

Does this mean that there are no countermeasures against malicious behavior by a centralized sequencer? Even though it is suboptimal, an escape hatch can be implemented to prevent transactions from being excluded from a block. Let's take a look at how Arbitrum tried to overcome this with their escape hatch, since it’s the most dominant rollup in terms of TVL and usage as of now.

Arbitrum’s escape hatch

A user initiates a transaction on a dApp, which is submitted directly to the sequencer. Right after receiving the transaction, the sequencer executes it and returns a soft confirmation to the user that the transaction has been successful. After approximately a second or so, the transaction is included in the next batch, which is sent to Ethereum via the addSequencerL2Batch function of the SequencerInbox contract. This is what happens when everything goes smoothly, but what if it doesn't?

In this hypothetical case, the sequencer can fail for a plethora of reasons and stop processing transactions. The user then submits their L2 transaction through L1 instead: the call reaches the Inbox contract as a sendUnsignedTransaction and is recorded among the delayedInboxAccs. If the transaction has been stalled there for a sufficient amount of time, anyone can call the forceInclusion method to move it from the delayedInboxAccs to the Inbox to be finalized. Even if the sequencer is a bad actor that is censoring your transactions, it cannot prevent your transaction from ending up in the Inbox this way, not even by spamming transactions. Once the Inbox is reached, the transaction ordering is finalized.
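The force-inclusion flow can be modeled in a few lines. The sketch below is a toy model and not the real contract interface: the `DelayedInboxModel` class, its method signatures, and the delay value are assumptions, with method names merely echoing the function names mentioned above.

```python
FORCE_INCLUSION_DELAY = 10  # assumed delay in L1 blocks; not Arbitrum's real value


class DelayedInboxModel:
    """Toy model of the escape hatch; not the actual on-chain contracts."""

    def __init__(self):
        self.delayed = []  # (tx, l1_block_submitted), waiting for inclusion
        self.inbox = []    # transactions whose ordering is final

    def send_unsigned_transaction(self, tx, l1_block):
        """A user bypasses the sequencer by submitting through L1."""
        self.delayed.append((tx, l1_block))

    def force_inclusion(self, l1_block):
        """Callable by anyone: move sufficiently old delayed messages to the inbox."""
        ready = [tx for tx, at in self.delayed
                 if l1_block - at >= FORCE_INCLUSION_DELAY]
        self.delayed = [(tx, at) for tx, at in self.delayed
                        if l1_block - at < FORCE_INCLUSION_DELAY]
        self.inbox.extend(ready)
        return ready
```

The key property is that `force_inclusion` has no access check, so the sequencer cannot veto it; it can only make users wait out the delay.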

The sequencer landscape beyond Arbitrum

Like Arbitrum, Optimism operates a centralized sequencer that is run by the Optimism Foundation. If the sequencer goes offline, users can still force transactions into the L2 network via Ethereum L1, which provides a sort of safety net. Optimism plans to decentralize the sequencer in the future using a crypto-economic incentive model and governance mechanisms, but the exact architecture has not been agreed upon as of now. A while ago, the Optimism Foundation put out a Request For Proposal (RFP) to enhance its sequencer design.

zkSync Era also has a centralized operator, serving as the network’s sequencer. Given the project's early stage, it even lacks a contingency plan for operator failure. Future plans include decentralizing the operators by establishing roles of validators & guardians though.

Finally, Polygon zkEVM, the second zkEVM rollup currently live on mainnet, features a centralized sequencer operated by the foundation as well. If the sequencer fails, user funds are frozen: in the current implementation, users can’t access them until the sequencer is back online. The functionality for an escape hatch exists in the code base but is not active. Polygon does plan to decentralize sequencing in the context of the Polygon 2.0 roadmap.

Terminology

Before delving into the complexities of shared sequencing networks, it is essential to establish precise terminology. It's important to note that a decentralized sequencer doesn't necessarily indicate shared usage among multiple rollups. It is feasible to have a decentralized group of sequencers responsible for transaction sequencing, governed by a PoS-based consensus mechanism (details will be provided later), exclusively serving a single network. In the context of this article, we recognize the following design concepts:

Centralized Sequencer: With a centralized sequencer, a single entity (or a small set of entities) is usually responsible for transaction ordering, and users need to trust this set of actors to behave honestly. This centralized approach generally offers flexibility when something needs to be adjusted, but it has inherent limitations: it provides only limited assurances regarding the resilience of pre-confirmations, may require forced exits that are not always advantageous, and can occasionally result in suboptimal liveness.

Decentralized Sequencer: A decentralized sequencer operates within a distributed model in which entities with significant stakes (providing crypto economic security) participate in the system's operation. This distributed approach supports the resilience of the rollup when compared to a centralized sequencer setup. However, there are potential trade-offs to take into account. Depending on the architecture and implementation, there could be increased latency, particularly if multiple L2 nodes must cast votes before confirming a rollup block.

Shared Sequencer: A shared sequencer model can, in principle, be either centralized or decentralized. However, our focus will be on decentralized shared sequencers, which inherently bring the advantages associated with a decentralized sequencer while also offering interoperability benefits. This includes achieving a degree of atomicity and eliminating the need to establish an independent sequencer set. However, it's important to note that during the transitional phases leading up to complete L1 finality, this model may provide less robust assurances than direct L1 sequencing (as discussed in the "Based Sequencing" section).

About sequencers

A shared sequencer network can be defined as a rollup-agnostic collection of sequencers purpose-built to support numerous distinct rollups. This model encompasses a range of trade-offs and distinctive characteristics, which will be explored in the following section.

Censorship Resistance & Liveness

Permissionless blockchains promise to be resilient against censorship by a single entity. They also guarantee that submitted transactions will be included eventually, a property known as liveness. In other words, liveness is the guarantee that a protocol can exchange messages between the network nodes, allowing them to come to a consensus, finalizing a transaction over some time horizon.

Achieving the appropriate balance between censorship resistance and liveness presents a formidable challenge. For instance, consensus algorithms such as Tendermint provide assurance of recovery post-attack; however, they encounter a compromise in liveness during an attack. A structural requirement necessitating approval from all sequencers for the inclusion of a transaction within a block, rather than the designation of a distinct leader, may not always represent an optimal choice. Despite its promotion of censorship resistance, a single leader approach also introduces a trade-off concerning centralization and shifts the potential for MEV extraction to a single leader, which needs consideration alongside potential resource constraints on a single leader node.

Let us briefly dive into the inner workings of Tendermint. At its essence, Tendermint stands as both a consensus algorithm and a peer-to-peer networking protocol that enables a network of nodes to reach consensus on a sequence of values (or blocks) in a decentralized, fault-tolerant, and secure manner. Its application is widely recognized in various Proof of Stake (PoS) blockchain systems.

Tendermint operates in rounds, each of which comprises multiple steps. In each round, a "proposer" is designated with the responsibility of proposing the next block. Subsequently, the validators within the network engage in a multi-step voting process to reach agreement on the proposed block.

Tendermint is a deterministic consensus mechanism that boasts several crucial features:

Immediate Finality: Once a block is added to the blockchain, it is final. There are no forks or alternative histories in the network.

BFT (Byzantine Fault Tolerance): The protocol can tolerate fewer than 1/3 of the validators being malicious (Byzantine) and still reach consensus.

Safety and Liveness: Tendermint provides the assurance that, so long as over 2/3 of the validators operate honestly, the network will reach consensus on new blocks (ensuring liveness). Additionally, once consensus is achieved on a block, it will remain secure against reversal (ensuring safety).
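The 1/3 and 2/3 thresholds translate directly into integer arithmetic. The helpers below are a minimal sketch with hypothetical names; `max_faulty` assumes every validator has equal weight, while `has_quorum` works on voting power.

```python
def max_faulty(n_validators: int) -> int:
    """Largest f such that n >= 3f + 1, assuming equally weighted validators."""
    return (n_validators - 1) // 3


def has_quorum(voting_power_for: int, total_voting_power: int) -> bool:
    """True when strictly more than 2/3 of the voting power has voted in favor."""
    return 3 * voting_power_for > 2 * total_voting_power
```

Using integer multiplication instead of dividing by three avoids rounding errors right at the threshold: with 3 validators, 2 votes are exactly 2/3 and therefore not enough.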

The HotStuff2 consensus algorithm represents an interesting alternative, especially when the primary objective is the optimization of liveness. This algorithm has been engineered to uphold continuous liveness, even when confronted with adversarial circumstances. An important attribute of HotStuff2 lies in its capacity to circumvent the waiting period which is related to maximal network delays (timeouts) when transitioning to a new leader in cases where transactions are being censored or when there’s no signing happening at all. Such characteristics make HotStuff2 an exceptionally compelling choice for decentralized sequencer networks, where the paramount objective revolves around ensuring uninterrupted operation and fortitude against adversarial circumstances.

Let's take a closer look at HotStuff2, which is an iteration on HotStuff, a Byzantine Fault Tolerant (BFT) consensus algorithm that was introduced in a 2018 research paper by Maofan Yin et al. HotStuff is particularly notable for its linear communication complexity and ability to maintain liveness under adversarial conditions.

HotStuff operates in a series of views (or rounds), where each view has a designated leader who is responsible for proposing a value. More specifically, HotStuff's consensus process involves three phases: Prepare, Pre-Commit, and Commit. In each phase, participants (validators) send their votes to the leader, who aggregates a supermajority of them into a quorum certificate (QC). A supermajority (e.g., 2/3 or more) of votes is required to proceed from one phase to the next. The designated leader, which rotates over time, proposes the value and coordinates the consensus process. Notably, HotStuff introduces a linear view change protocol, where validators only need to communicate with the new leader to switch views. This reduces communication overhead and ensures that view changes can occur quickly and efficiently, even if the previous leader was censoring or not signing messages (suffering an outage or under attack).
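The three-phase flow can be sketched as follows. This is a heavily simplified illustration and not the HotStuff protocol itself: signatures, timeouts, and the view-change logic are omitted, round-robin leader rotation is an assumption, and all function names are hypothetical. The quorum check uses "more than 2/3", which matches the usual 2f + 1 out of 3f + 1 validators.

```python
from dataclasses import dataclass

PHASES = ("prepare", "pre-commit", "commit")


@dataclass(frozen=True)
class QuorumCertificate:
    view: int
    phase: str
    block: str
    voters: frozenset


def leader_for(view: int, validators: list) -> str:
    """Round-robin rotation (an assumption; real deployments may differ)."""
    return validators[view % len(validators)]


def form_qc(view, phase, block, votes, validators):
    """Aggregate votes into a QC if more than 2/3 of the validators voted."""
    voters = frozenset(v for v in votes if v in validators)
    if 3 * len(voters) > 2 * len(validators):
        return QuorumCertificate(view, phase, block, voters)
    return None


def run_view(view, block, validators, votes_by_phase):
    """Return the QCs of all three phases, or None if any phase lacks a quorum."""
    qcs = []
    for phase in PHASES:
        qc = form_qc(view, phase, block, votes_by_phase.get(phase, ()), validators)
        if qc is None:
            return None  # the view fails and validators move on to the next leader
        qcs.append(qc)
    return qcs
```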

Finally, HotStuff2 is an iteration on HotStuff. It differs from Tendermint in that, while Tendermint also has linear communication, it is not responsive, whereas HotStuff2 is. If a sequence of honest leaders exists, the protocol is optimistically responsive because all steps (except the first leader's proposal) depend on obtaining a quorum of messages from the previous step. In a shared sequencer setting, this enables the protocol to achieve better liveness than Tendermint or HotStuff, a key property shared sequencers need to provide rollups with.

Fundamental Components of Shared Sequencer Networks

A decentralized (and potentially shared) sequencer set is responsible for submitting transactions to the settlement layer. This set can be joined if certain requirements are met, and as long as the maximum number of participants isn’t reached. The upper bound of participants could, for example, serve to optimize for latency and throughput. In the case of Tendermint consensus, for example, the threshold is set relatively low for precisely these reasons. On the other hand, the requirements to join such a set are similar to those of becoming a validator on an L1 blockchain and may include hardware requirements or a minimal stake. This is particularly important if the sequencing layer aims to provide soft finality based on crypto-economic security guarantees.

Transaction processing happens with several components that work together. More specifically, the following elements:

JSON-RPCs: Facilitate transaction submissions to nodes acting as mempools before the transaction ordering process. Within the mempool, mechanisms to determine the queue and transaction selection processes are essential for efficient block building.

Block/Batch Builder Algorithm: Responsible for processing transactions in the queue and converting them into blocks or batches, this task may involve optional data compression techniques to minimize the resultant block's size (thereby reducing the calldata required and, hence, the cost of posting data to the base layer). The block-building mechanism should be separated from the proposer, similar to the approach used in Proposer Builder Separation (PBS). Distinct roles for builders and proposers ensure consistent and fair operation while mitigating the risk of MEV exploitation. Separating execution from ordering is crucial for optimizing efficiency and enhancing censorship resistance.

Peer-to-Peer (P2P) Layer: This layer is used to receive transactions from other sequencers and to gossip blocks across the network after construction, ensuring efficient operation across multiple rollups.

Leader Rotation Algorithm: With a simple leader rotation mechanism, there is effectively no need for consensus, as a single leader is responsible for sequencing each round. This improves speed and latency, but it comes with censorship and MEV exploitation risks and hence might not be ideal.

Consensus Algorithm: As previously discussed, consensus algorithms like Tendermint or HotStuff2 (potentially leader-based for improved latency) can be implemented to ensure that participating nodes are in agreement with the proposed ordering of transactions. Alternatively, a consensus algorithm could be used to determine the leader.

RPC Client: Vital for block/batch submission to the underlying DA layer & consensus layer, ensuring the block is securely posted to the DA layer, which guarantees ultimate finality and on-chain data availability.
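To show how these components fit together, here is a toy pipeline. It is a sketch under stated assumptions: the `SharedSequencerNode` class is hypothetical, the builder is plain first-come-first-served, leader selection is round-robin without any consensus, and the DA layer is a local list standing in for an RPC client.

```python
from collections import deque


class SharedSequencerNode:
    """Toy pipeline: mempool -> batch builder -> leader -> DA client."""

    def __init__(self, sequencers, max_batch_size=3):
        self.sequencers = list(sequencers)
        self.max_batch_size = max_batch_size
        self.mempool = deque()
        self.da_layer = []  # stand-in for the RPC client to the DA layer
        self.height = 0

    def submit(self, rollup_id, tx):
        """What a JSON-RPC endpoint would do: enqueue the transaction."""
        self.mempool.append((rollup_id, tx))

    def build_batch(self):
        """First-come-first-served builder; real builders may order differently."""
        size = min(self.max_batch_size, len(self.mempool))
        return [self.mempool.popleft() for _ in range(size)]

    def current_leader(self):
        """Round-robin leader rotation by block height."""
        return self.sequencers[self.height % len(self.sequencers)]

    def produce_block(self):
        block = {
            "height": self.height,
            "leader": self.current_leader(),
            "txs": self.build_batch(),
        }
        self.da_layer.append(block)  # "post" the block to the DA layer
        self.height += 1
        return block
```

Note that the node never executes a transaction: it only orders tagged payloads, which is what lets one sequencer set serve many rollups.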

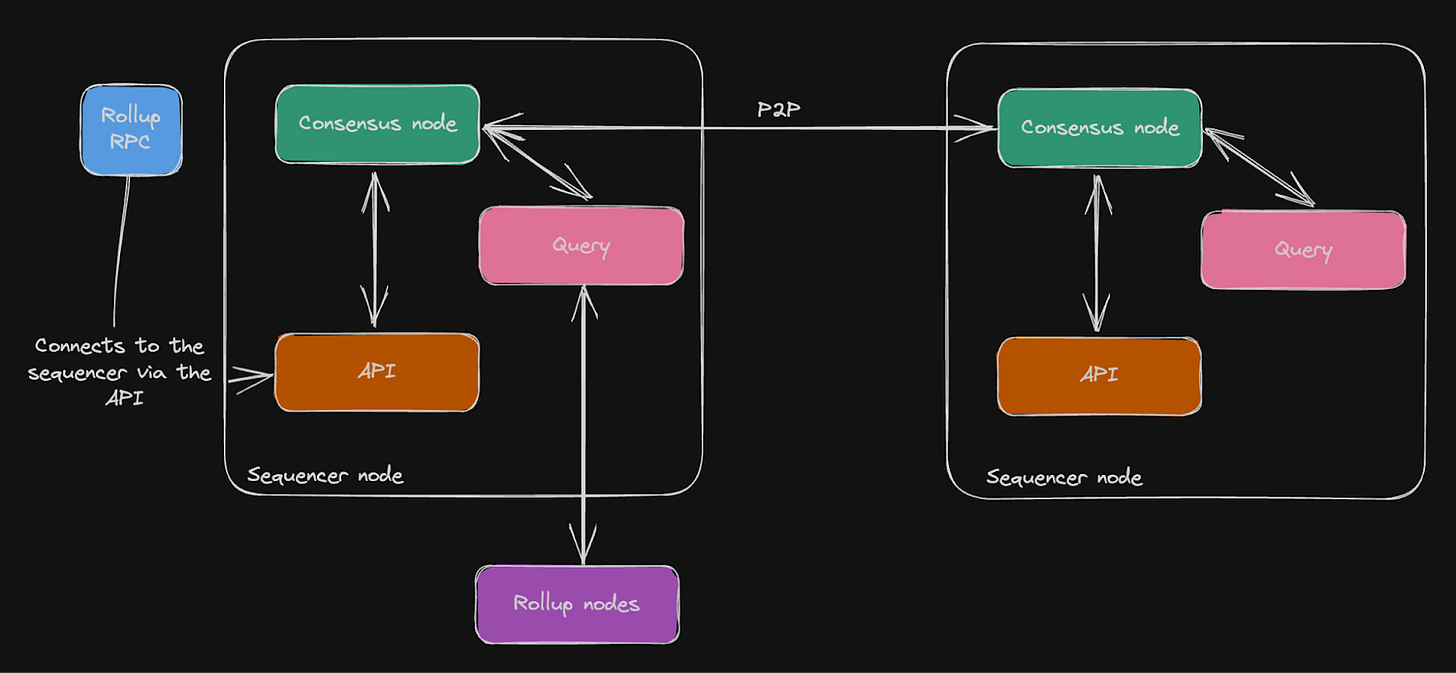

The illustration above integrates these components and provides a high-level visualization of what occurs in a shared sequencer network. Transactions are submitted to the shared mempool through JSON-RPCs, after which they undergo the block-building algorithm, ideally in a PBS-style setting. We will explore later how MEV-aware block-building networks like SUAVE can assume this function. Once relayed to the set of proposers (the shared sequencer set), the block is proposed to the DA layer, based on a consensus algorithm or leader rotation mechanism, and transmitted to the rollup nodes for soft confirmation, leading to a preliminary state update (as discussed in the next section). Finally, the rollup nodes receive hard finality from the DA layer once the blocks have been validated through L1 consensus.

The visualization below zooms into the shared sequencer set, visualized as the green squares on the right side of the above illustration. It shows how the P2P network serves as the communication layer in the context of the consensus algorithm employed by the shared sequencer network. As can be seen on the image, the rollup RPC connects to the sequencer API for transactions to be ordered by the sequencer. Once consensus has been reached amongst all the sequencer nodes the ordered transactions will be able to be retrieved by the designated rollups.

Soft Finality Guarantees

To provide users with the user experience they expect when interacting with an L2, the rollup nodes receive the ordered block (which is also sent to the DA layer) directly from the sequencer, together with a soft finality guarantee (often called a soft commitment). The block is ordered but has not yet been finalized through L1 consensus; the soft guarantee is essentially a promise that the block will eventually be posted as is on the DA layer. The DA layer, which relies on consensus mechanisms (e.g., Ethereum for a rollup relying on Ethereum for DA), ensures transaction integrity for the rollup and its users.

Subsequently, these rollup nodes execute the transactions and commit to the resulting state transition, which is added to the canonical rollup chain. Notably, rollups maintain their sovereignty when using a shared sequencer. Thanks to the complete storage of all transaction data within the base layer on which they reside, they can fork away from a shared sequencer at any time. The state root from the State Transition Function (STF) on the rollup side is calculated from the transaction roots (inputs) and posted to the DA layer by the shared sequencers.
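The rollup-side half of this flow can be sketched as follows: a rollup node filters its own transactions out of a shared block, applies its own STF, and derives a state root. Everything here is illustrative. The transfer-only STF is hypothetical, and the SHA-256 hash of the serialized state is a stand-in for a real Merkle root.

```python
import hashlib
import json


def transactions_for(rollup_id, shared_block):
    """Keep only this rollup's transactions, preserving the sequencer's order."""
    return [tx for rid, tx in shared_block if rid == rollup_id]


def apply_stf(balances, txs):
    """Hypothetical STF: simple transfers; underfunded transfers are skipped."""
    state = dict(balances)
    for tx in txs:
        if state.get(tx["from"], 0) >= tx["amount"]:
            state[tx["from"]] -= tx["amount"]
            state[tx["to"]] = state.get(tx["to"], 0) + tx["amount"]
    return state


def state_root(state):
    """Stand-in for a Merkle root: hash of the canonically serialized state."""
    return hashlib.sha256(json.dumps(state, sort_keys=True).encode()).hexdigest()


shared_block = [
    ("rollup-a", {"from": "alice", "to": "bob", "amount": 5}),
    ("rollup-b", {"from": "carol", "to": "dave", "amount": 1}),
    ("rollup-a", {"from": "bob", "to": "alice", "amount": 2}),
]
state = apply_stf({"alice": 10}, transactions_for("rollup-a", shared_block))
root = state_root(state)
```

Because the STF lives entirely on the rollup side, swapping the source of `shared_block` for a different sequencer leaves the execution logic untouched.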

Benefits of Shared Sequencers

Shared sequencers offer several benefits over the current, centralized design used by all major rollups:

A modular plug & play solution: The secure decentralization of sequencers in an isolated setup can be challenging. Shared sequencers offer a more streamlined solution, enabling entities to seamlessly integrate with an existing sequencer, thereby eliminating the challenges associated with bootstrapping and maintaining an entire validator set. This "plug-and-play" model promotes a modular approach where transaction ordering is isolated into a distinct layer. Within this paradigm, shared sequencers can essentially be seen as a fast and convenient way to integrate middleware technology while reducing the time spent on it.

Pooled security and enhanced decentralization: Shared sequencers advocate for a unified sequencing layer that consolidates robust economic security. Rather than dispersing numerous smaller committees across individual rollups, this approach aggregates the advantages of security and decentralization across a multitude of rollups.

Fast (soft) finality: Just as standalone rollup sequencers promise swift transactions, it's important to note that shared sequencers also deliver these ultra-fast pre-confirmations (see soft finality guarantees).

Decentralization as a Service: A defining feature of shared sequencers is their ability to separate transaction ordering from execution. This means that the block-producing nodes within such sequencers do not need to execute transactions or store the state of any dependent rollup. The system remains scalable and agnostic to the number of distinct rollups utilizing its capabilities. This enables shared sequencers to offer decentralization as a service to numerous rollups. As a result, these rollups enjoy the benefits of censorship resistance that a decentralized network typically offers, without the need to independently establish such a network. This setup grants rollups the freedom to define their own state transition function, opening the door to specialized performance attributes that cater to a wide range of use cases.

Upholding rollup sovereignty: As previously hinted, rollups that adopt a shared sequencer approach retain their sovereignty. Rollups can effortlessly harness the benefits offered by shared sequencers without concerns about entanglement or lock-in. Since transaction data is stored on a foundational layer, and rollup full nodes retain the state and oversee execution, shared sequencers are inherently designed to prevent undue control over the rollup. Transitioning from one sequencing layer to another is straightforward, requiring only a software update on the rollup node to opt for a different (shared) sequencer solution.

Facilitating cross-rollup composability: The fact that shared sequencers manage transaction ordering for multiple rollups, effectively grouping them into a single block, empowers them to construct atomic transaction bundles. In the case of validity rollups, there is research going on to allow users to conditionally specify that a transaction in one rollup should only be processed if a corresponding transaction in another rollup is concurrently processed. However, that will likely only be possible by imposing rather strict state coupling at the rollup (not sequencer) layer. These conditional transaction inclusion capabilities could however enable innovative functionalities. Nonetheless, there are limitations to this approach, as while there are soft guarantees from the sequencer, there is no hard guarantee that transactions on another rollup are actually executed, which is especially problematic in the optimistic case. An intriguing approach to address this issue, primarily tailored to optimistic rollups that are not inherently asynchronously composable, is shared validity sequencing (covered later in this report).
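A minimal sketch of all-or-nothing bundle inclusion is shown below. The `include_bundles` function and the capacity model are assumptions made for illustration; each bundle is a list of (rollup id, transaction) pairs that must land in the same block or not at all.

```python
def include_bundles(pending_bundles, capacity):
    """Include each bundle entirely or not at all, subject to block capacity."""
    block, deferred = [], []
    for bundle in pending_bundles:
        if len(block) + len(bundle) <= capacity:
            block.extend(bundle)     # every leg of the bundle goes in together
        else:
            deferred.append(bundle)  # no partial inclusion; retry in a later block
    return block, deferred


bundles = [
    [("rollup-a", "t1"), ("rollup-b", "t2")],
    [("rollup-a", "t3"), ("rollup-b", "t4")],
    [("rollup-a", "t5")],
]
block, deferred = include_bundles(bundles, capacity=3)
```

This only guarantees atomic inclusion. Whether both legs also execute successfully is decided by each rollup's own state transition function, which is exactly the limitation described above.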

Another significant advantage of this shared layer is its capacity to consolidate aspects such as the committee and economic security across multiple rollups, potentially enhancing overall strength when compared to individual rollup committees in a decentralized but isolated setup.

Both shared and decentralized sequencer models can be designed in various ways but are susceptible to DoS (Denial of Service) attacks. Therefore, it is crucial to establish economic barriers for joining the sequencer set and submitting transactions to the sequencer layer. These barriers are essential for maintaining the integrity of the syncing layer and protecting it from DoS attacks.

Economic barriers apply to both bounded (limited) and unbounded (unlimited) participants in the sequencer network and serve to prevent abuse of the DA layer. In unbounded sets, the bond should cover additional expenses to defend against spam on the synchronization layer. In bounded sets, bond requirements are determined by the cost/revenue equilibrium.

Provable finality presents challenges for unbounded sequencer sets due to uncertainty about active voters/signers, whereas bounded sets achieve finality through a majority of sequencers signing off. This necessitates syncing layer awareness, introducing some overhead.

Bounded sequencer sets optimize performance but may sacrifice decentralization and censorship resistance. However, they offer fast, deterministic provable finality.
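To make the finality rule for bounded sets more concrete, here is a minimal sketch of a sign-off check. The class name, the one-member-one-vote weighting, and the default two-thirds threshold are our own illustrative assumptions; a real design would typically weight votes by stake and verify signatures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoundedSequencerSet:
    """Hypothetical bounded set: membership is known, so a quorum can be counted."""
    members: frozenset[str]

    def is_final(self, signers: set[str], num: int = 2, den: int = 3) -> bool:
        # Only signatures from known members count towards the quorum.
        valid = signers & self.members
        # Strictly more than num/den of the set must sign (integer arithmetic).
        return len(valid) * den > len(self.members) * num


committee = BoundedSequencerSet(frozenset({"s1", "s2", "s3", "s4"}))
print(committee.is_final({"s1", "s2", "s3"}))  # three of four signed
```

An unbounded set cannot run this check as written, because the denominator (the number of active signers) is not known with certainty.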

These distinctions are reminiscent of patterns in legacy blockchains, such as Ethereum's Proof of Stake (PoS) and CometBFT chains. Economic considerations may make unbounded and bounded sets functionally similar. According to 0xRainandCoffee, the difference in architectural designs can be viewed as a reflection of design philosophy, but in practice, the outcomes are relatively similar. This aspect is elaborated on in more detail in his comprehensive article, and we strongly recommend reading his publication.

In summary, all rollup models eventually achieve full L1 security upon the finalization of L1. The primary objective of most sequencer designs is to provide enhanced features, even if it means compromising slightly on assurances during the interim phase before realizing the comprehensive safety and security associated with L1 settlement. Each model entails its own set of trade-offs, especially regarding functionality vs. trust. The simplified visualization below provides an overview of this concept:

Shared Sequencing Solutions

Espresso

The Espresso sequencer consists of five main components:

HotShot: This consensus protocol is derived from the HotStuff consensus mechanism. It provides fast finality in optimistic scenarios while ensuring liveness and safety during pessimistic scenarios. It's optimized for an unbounded and permissionless group of validators. Notable features include a pacemaker for view synchronization, a Verifiable Delay Function (VDF) based random beacon for leader rotation, a dynamic stake table, and distributed signature aggregation. The HotShot consensus mechanism requires a ⅔ majority for secure usage, and once transactions are finalized, they become irreversible.

Espresso DA: The Espresso DA layer offers two distinct paths for data retrievability. The first path is optimistic and fast, while the second is a more reliable but slower backup path designed for adversarial conditions. The DA layer generates blocks that can reference HotShot block proposals instead of the original block data.

Rollup REST API: This API is used by rollups to seamlessly integrate with the Espresso Sequencer.

Sequencer Contract: The sequencer contract is a smart contract that validates the HotShot consensus. It can function as a light client and manages transaction order checkpoints. Additionally, it oversees the stake table for the HotShot protocol.

Networking Layer: This layer facilitates communication between nodes participating in both the DA layer and the HotShot consensus.

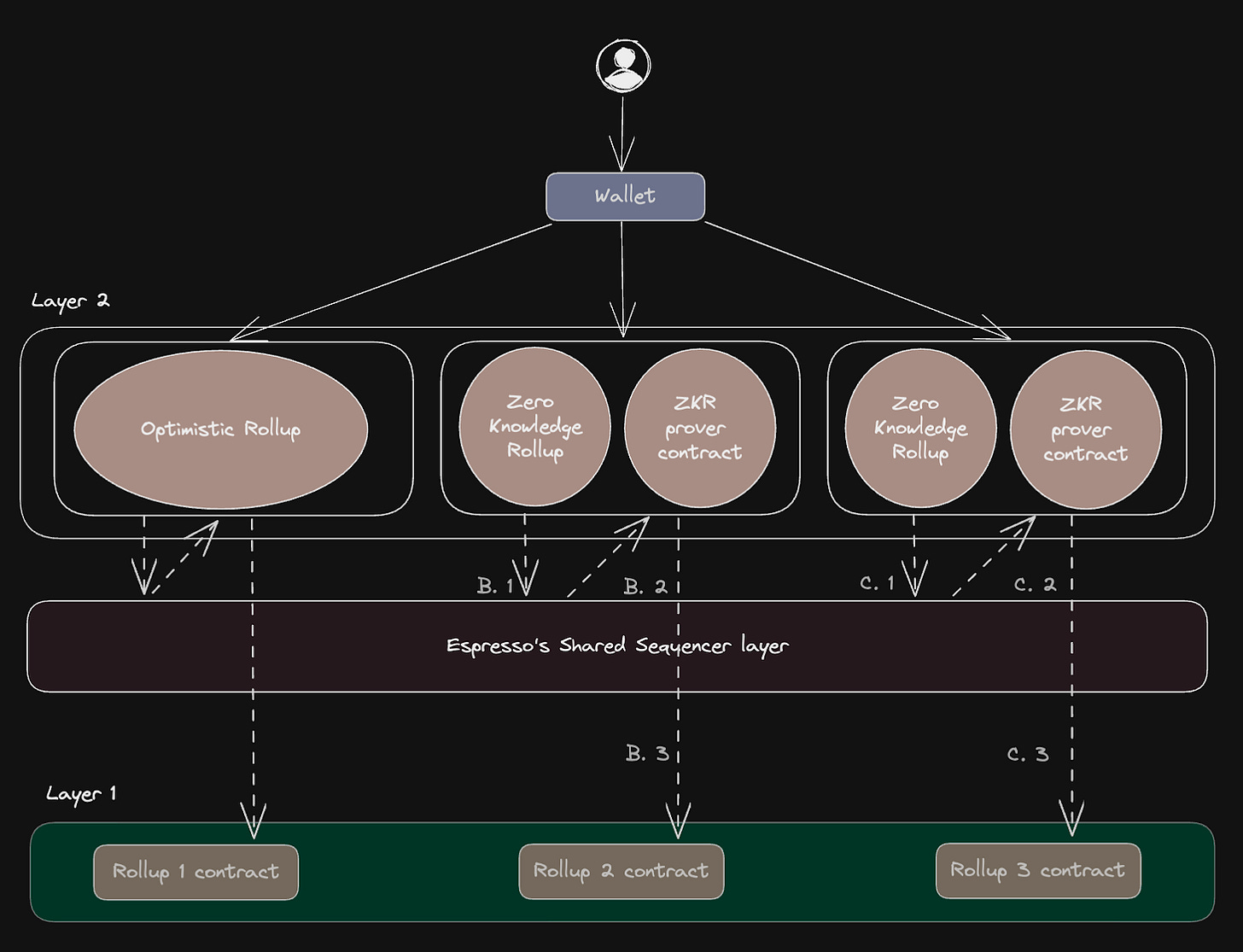

When a user's transaction is sent to the rollup, it undergoes transaction verification on the rollup using either a ZK or optimistic scheme. ZK-based verification requires an additional prover contract, while optimistic rollups have a window for fraud proofs. Transactions received from the rollups are forwarded to Espresso's sequencer network, where they are broadcast to other nodes for consensus. Sequencer-submitted transactions must be linked to a rollup-specific identifier for validation. These transactions are then aggregated under rollup-specific identifiers using namespace Merkle Trees, with the commitment being verified and stored on the underlying DA layer. It's important to note that HotShot itself doesn't include rollup-specific logic; the sequencer network's role is limited to ordering transactions, while transaction execution, including the exclusion of invalid transactions, is managed by the execution layer.
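The grouping of transactions under rollup-specific identifiers can be sketched as follows. This is a simplified stand-in, not Espresso's implementation and not a full namespace Merkle Tree (which also tracks namespace ranges in inner nodes): it only groups payloads by a numeric namespace and commits to the per-namespace roots. The function names and the 8-byte namespace encoding are assumptions made for illustration.

```python
import hashlib
from collections import defaultdict


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(leaves: list[bytes]) -> bytes:
    """Plain binary Merkle root with domain-separated leaf and inner hashes."""
    if not leaves:
        return h(b"")
    level = [h(b"\x00" + leaf) for leaf in leaves]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])  # duplicate the last node on odd levels
        level = [h(b"\x01" + level[i] + level[i + 1])
                 for i in range(0, len(level), 2)]
    return level[0]


def commit_block(txs: list[tuple[int, bytes]]) -> tuple[bytes, dict[int, list[bytes]]]:
    """Group (namespace, payload) pairs per rollup and commit to the whole block."""
    by_namespace: dict[int, list[bytes]] = defaultdict(list)
    for namespace, payload in txs:
        by_namespace[namespace].append(payload)  # keeps per-rollup ordering
    namespace_leaves = [
        ns.to_bytes(8, "big") + merkle_root(by_namespace[ns])
        for ns in sorted(by_namespace)
    ]
    return merkle_root(namespace_leaves), dict(by_namespace)


root, groups = commit_block([(1, b"tx-a"), (2, b"tx-b"), (1, b"tx-c")])
print(root.hex(), groups[1])
```

Each rollup only needs the payloads under its own identifier, and the sequencer never interprets them; execution and filtering of invalid transactions stay with the rollup.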

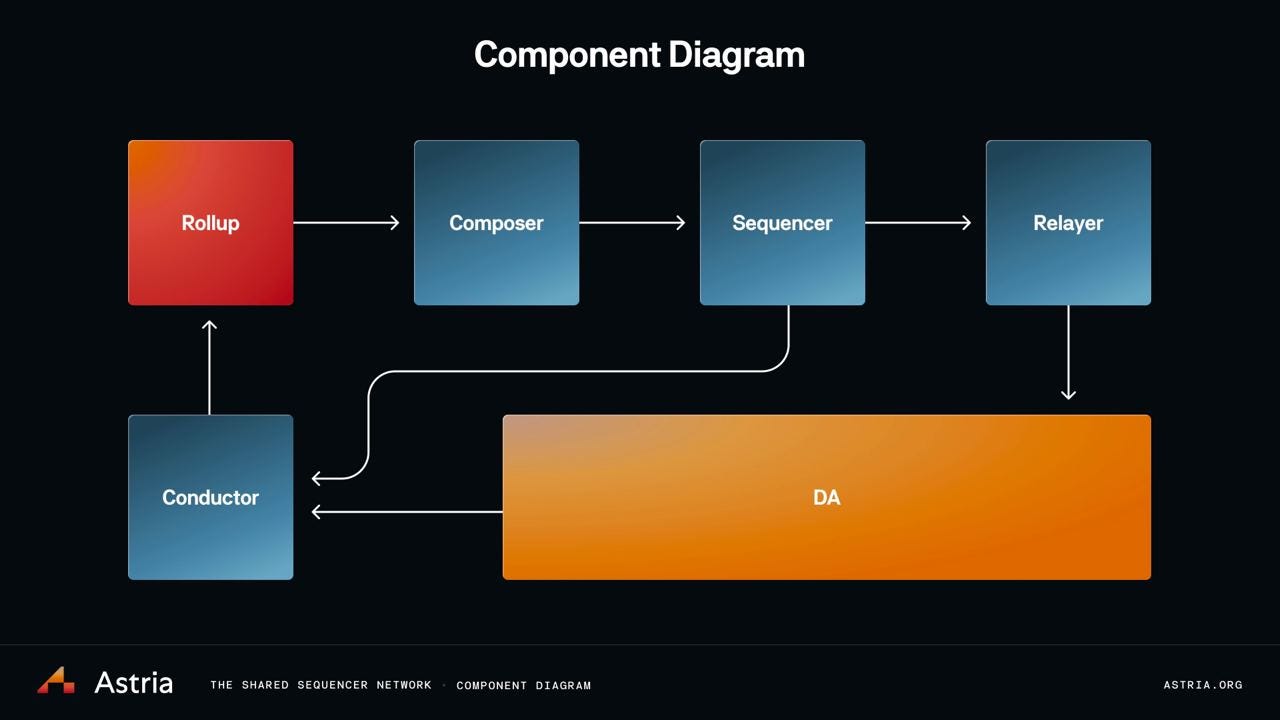

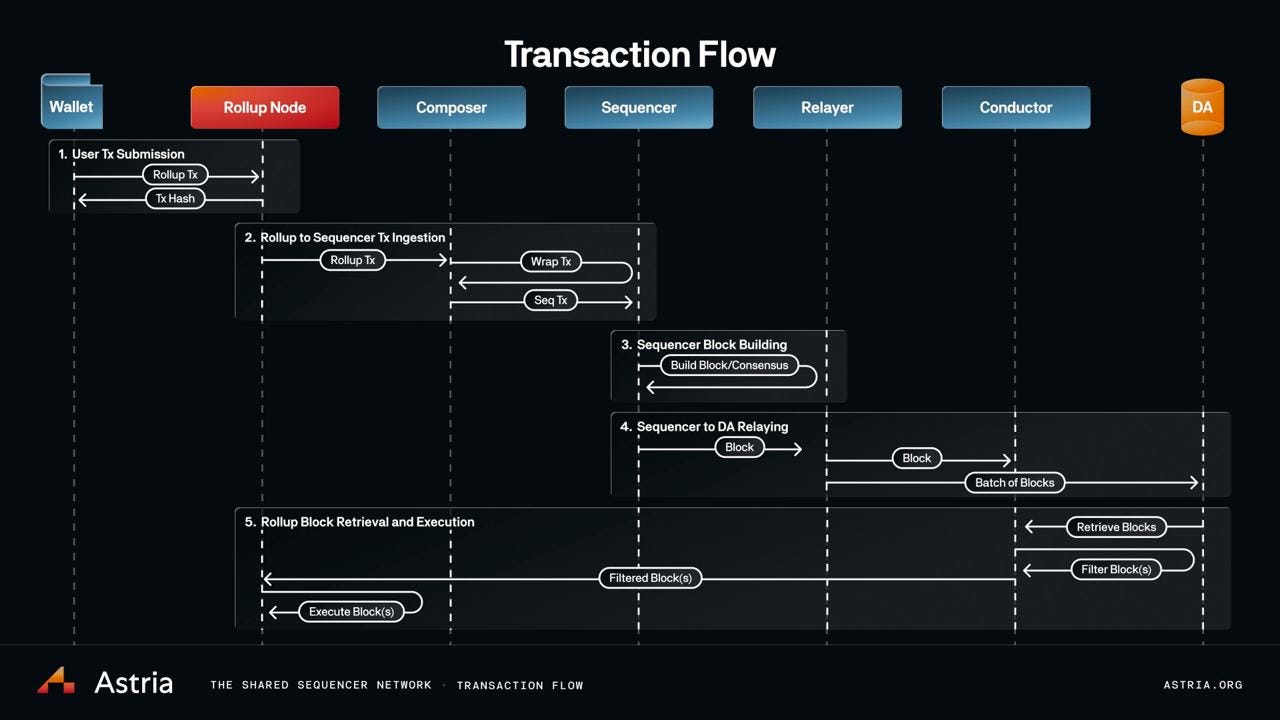

Astria

Astria is a decentralized, shared sequencer network that aims to overcome the limitations of traditional, centralized sequencers (as outlined earlier in this report).

Astria addresses scalability and latency issues by only ordering transactions rather than executing them. It relies on a Tendermint-based leader rotation mechanism that ensures liveness and mitigates the risk of a single point of failure through a decentralized network of sequencers.

Astria also provides support for soft finality guarantees, enabling fast finality on the rollup execution layers integrated into Astria's shared sequencing layer. Instead of generating distinct state roots for individual blocks, Astria's sequencer architecture is designed to aggregate transactions from multiple rollups. These transactions are sequentially ordered into cohesive blocks and then posted to the underlying DA layer, effectively separating transaction ordering from execution. This decoupling allows Astria to accommodate a wide range of rollups with different state transition functions, making it rollup-agnostic. Furthermore, while Astria's atomic inclusion capability is still in its early stages, it holds significant potential in areas such as bridging and cross-chain MEV.

As mentioned before, the decoupling of execution from sequencing also ensures that rollups can seamlessly modify or change the sequencer set, fostering competition in the sequencer MEV space while insulating rollups from potential sequencer-induced vulnerabilities.

Radius

Radius is a decentralized and shared sequencing layer with the dual goals of eliminating harmful MEV and censorship risks, while also creating economic value for rollups.

Similar to Astria, Radius helps rollups achieve their maximum scalability potential with a single sequencer. To mitigate the risk of a potential single point of failure, Radius maintains a distributed network of sequencers to ensure security and liveness for the rollup. Under this model, multiple sequencers operate simultaneously, ensuring that even if a sequencer fails, there are remaining sequencers that can continue to operate. This eliminates the need for consensus mechanisms, which often introduce performance tradeoffs compared to single sequencers. Hence Radius takes an approach where the sequencer is changed secretly each round, ensuring that attackers are unable to learn anything about the next sequencer in line.

To achieve MEV-resistance, which is undoubtedly a standout feature, Radius employs a mechanism called Practical Verifiable Delay Encryption (PVDE), enabling an encrypted mempool. PVDE is a zero-knowledge cryptography scheme that plays a crucial role in ensuring the trustless sequencing of transactions. It is instrumental in preventing centralized sequencers from engaging in malicious actions such as frontrunning, sandwiching, or censoring user transactions.

This type of MEV-resistance is achieved by revealing the transaction contents only after the sequencer has determined their order. Specifically, Radius temporarily encrypts transactions based on a time-lock puzzle, introducing a time delay for the operator to discover the symmetric key required to decrypt the transaction data. This approach generates Zero-Knowledge Proofs (ZKPs) within 5 seconds, which are subsequently used to demonstrate that solving the time-lock puzzles will result in the correct decryption of valid transactions. However, the tradeoff here is that, compared to other shared sequencer networks that offer almost instant soft finality, Radius, while MEV-protected, may provide a slightly slower user experience (in its current form the time delay takes 5 seconds, but improvements have been made to reduce the proof generation to 1 second due to the switch to the Halo2 proving system).
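As a rough illustration of the time-lock idea, the sketch below uses the classic repeated-squaring puzzle: whoever knows the factorization of the modulus can create the puzzle quickly, while everyone else must perform `t` sequential squarings to recover the key. This is not Radius's PVDE construction; the function names, the toy parameters, and the additive masking of the key are our own assumptions, and the zero-knowledge proof that the puzzle decrypts to a valid transaction is not modeled.

```python
def make_puzzle(secret_key: int, t: int, p: int, q: int, a: int = 3):
    """Hide secret_key behind t sequential squarings modulo n = p * q."""
    n = p * q
    if not 0 <= secret_key < n:
        raise ValueError("secret_key must be smaller than the modulus")
    phi = (p - 1) * (q - 1)
    # Trapdoor shortcut: reduce the exponent 2^t modulo phi(n).
    # Valid because a is coprime to n for the primes used here.
    e = pow(2, t, phi)
    b = pow(a, e, n)              # equals a^(2^t) mod n
    masked_key = (secret_key + b) % n
    return n, a, t, masked_key


def solve_puzzle(n: int, a: int, t: int, masked_key: int) -> int:
    """Without the factorization, the solver performs t squarings in sequence."""
    b = a % n
    for _ in range(t):
        b = (b * b) % n
    return (masked_key - b) % n


# Toy parameters: two Mersenne primes (far too structured for real use).
P, Q = 2**31 - 1, 2**61 - 1
puzzle = make_puzzle(secret_key=123456789, t=10_000, p=P, q=Q)
print(solve_puzzle(*puzzle))
```

In the flow described above, the sequencer would commit to an ordering first and only then finish the squarings, so the transaction contents stay hidden while the order is being decided.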

Furthermore, similar to Astria, the Radius sequencer network plays a crucial role as a communication tool, facilitating the synchronization of rollup data and enhancing interoperability. When multiple rollups submit their transactions to Radius and these transactions are subsequently ordered within a single cross-rollup block, rollups using the same sequencer achieve atomic composability, albeit with some limitations.

To illustrate this in more detail, let’s have a look at the transaction flow in Radius:

Users dispatch their transactions to the sequencing layer

The sequencing layer arranges the transactions and constructs a block

The composed block is subsequently submitted to the rollup

The rollup proceeds to execute the transactions following the order provided by the sequencing layer

Finally, the rollup submits the executed transactions to the settlement/DA layer for finalization

Madara

Madara is the sequencer used within the StarkNet L2 framework, which allows for great flexibility in the sense that it can be run in both a centralized and a decentralized variant. It deviates slightly from other sequencer models in terms of its technological underpinnings. Built upon the Substrate framework and using the GRANDPA and BABE consensus mechanisms pioneered by Polkadot, Madara offers support for both iterations of the Cairo VM, facilitated through the integration of StarkWare's Blockifier and, in the near future, via LambdaClass’s Starknet_in_rust implementation.

The sequencing process of Madara can be tailored to align with specific application requirements. Options range from FCFS or PGA ordering to the implementation of consensus mechanisms such as HotStuff. In scenarios where an encrypted mempool is required, Madara's adaptive design accommodates such requirements with ease. Due to this flexibility and customizability, it’s comparable to a (crucial) infrastructural component for the appchains that integrate Madara. Madara can also be spun up easily and customized as one sees fit, and can therefore be introduced even in L3 or L4 instances.

Conventionally, sequencers abstain from data interpretation; however, a potential integration of Herodotus’s storage proofs introduces the prospect of limited data interpretation. By coupling storage proofs with validity proofs, a comprehensive perspective of a cross-chain future can be attained.

Madara, in its current form, serves as the off-the-shelf sequencer solution for StarkNet, but research and development are ongoing. One example is the StarkNet OS, which is being rewritten in Rust instead of Cairo; you can read more about it here. Another example is the effort to enhance the decentralization of Madara, for which the team is considering adding an escape hatch. The StarkWare sequencer is currently centralized, but in this talk Abdelhamid Bakhta said that there are four main points that need to be considered in the design architecture:

Leader election

The underlying L1 could play a role in the leader election process, for example as a source of randomness.

The stake required to sequence the transactions of the L2 needs to be locked in a smart contract on the underlying L1.

They are considering a pBFT/Tendermint-based consensus mechanism since it offers great liveness guarantees for bounded sequencer sets.

Production of proofs

The proposed solution is a structure similar to Mina Protocol’s division and designation of labor process.

State updates

A crucial distinction between proof production and L1 state updates lies in the latter's non-computational nature. L1 state updates involve observing the array of proofs generated by the proof production process and subsequently adhering to a distinct protocol to integrate these proofs into the L1 state. This integration process is basically just copying and pasting proofs onto the L1 state. The absence of computation within the L1 state updates not only prevents wasteful computational efforts in case of update failures but also eliminates the relevance of computational capacity, thereby preventing centralization tendencies around the best hardware operators.

Is there an ingenious method to establish an open race protocol for L1 state updates, where the participants who lose the race face minimal losses? One notion involves implementing a commit-reveal scheme, wherein the first participant commits collateral in exchange for a brief period of exclusivity. To counter prolonged DoS attacks, the collateral required could be exponentially increased. Creating an open race for L1 state updates can entail a policy of rejecting proofs that are not submitted by the designated prover, as previously mentioned. However, an intriguing alternative is to limit the distribution of rewards solely to the designated provers, without rejecting the proofs themselves. This approach would enable incentivized parties to execute L1 state updates at their own expense using their individual proofs. It's worth noting that there's always the option to revert to a stake-based leader schedule for L1 state updates. In such a scenario, the benefit of updating at the pace of the first incentivized entity would be forfeited. This is a brief summary of the design currently being explored by Madara. It remains an active research topic, and we definitely recommend reading the whole discussion here.
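The exclusivity-for-collateral idea can be sketched as a small state machine. Everything here is an illustrative assumption rather than the design under discussion: the class and method names, measuring time in block numbers, doubling as the exponential rule, and forfeiting the collateral of a holder who lets the window lapse. The reveal step (checking the submitted update against the commitment) is omitted.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class StateUpdateRace:
    base_collateral: int
    exclusivity_window: int          # length of the exclusive period, in blocks
    failed_rounds: int = 0
    holder: Optional[str] = None
    locked: int = 0
    expires_at: int = 0

    def required_collateral(self) -> int:
        # Collateral doubles after every consecutive stalled commitment.
        return self.base_collateral * 2 ** self.failed_rounds

    def _expire(self, now: int) -> None:
        if self.holder is not None and now >= self.expires_at:
            # The holder stalled: collateral is forfeited, the price goes up.
            self.holder, self.locked = None, 0
            self.failed_rounds += 1

    def commit(self, who: str, collateral: int, now: int) -> bool:
        self._expire(now)
        if self.holder is not None or collateral < self.required_collateral():
            return False
        self.holder, self.locked = who, collateral
        self.expires_at = now + self.exclusivity_window
        return True

    def submit_update(self, who: str, now: int) -> int:
        """Return the refunded collateral, or 0 if the caller has no exclusivity."""
        self._expire(now)
        if self.holder != who:
            return 0
        refund = self.locked
        self.holder, self.locked, self.failed_rounds = None, 0, 0
        return refund


race = StateUpdateRace(base_collateral=10, exclusivity_window=5)
race.commit("alice", 10, now=0)      # alice never submits her update
print(race.commit("bob", 10, now=5), race.commit("bob", 20, now=5))
```

Under these assumptions, an attacker who repeatedly grabs exclusivity without delivering pays a cost that grows geometrically, while an honest participant who delivers gets the collateral back and resets the price.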

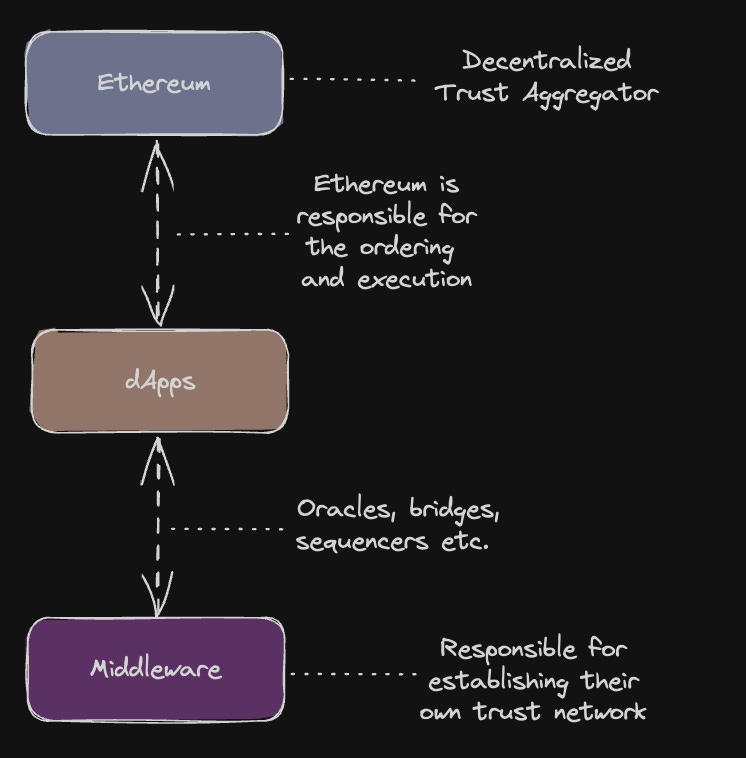

EigenLayer

Another approach that has recently gained attention is the use of middleware services that leverage the existing Ethereum validator set and trust network for security through a restaking mechanism. This approach is being pioneered by EigenLayer.

EigenLayer consists of a series of smart contracts on the Ethereum blockchain that allow users to restake whitelisted Ether liquid staking derivatives. Essentially, it provides a mechanism to utilize the existing trust network for purposes beyond its original intent. Let us provide a few examples to clarify what we mean by this.

When users interact with protocols, such as a DEX on Ethereum, they must place trust not only in Ethereum and the DEX they use but also in several other protocols that the DEX (or potentially the blockchain) interacts with. From a user's perspective, this can mean:

Trusting oracles for price feeds

Trusting bridges for cross-chain transfers

Trusting relayers for MEV Boost

Trusting a DA provider (single provider, committee or DA layer) if the user is on a Validium/DAC or Celestium

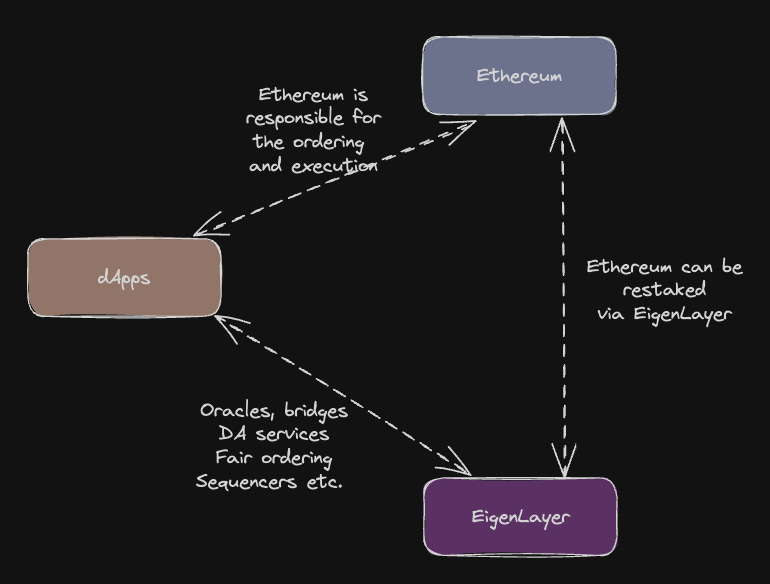

This is where EigenLayer comes in. All the cases mentioned above are examples of users needing to trust a middleware service that is not secured by the Ethereum validator set, thus existing outside the ecosystem's security and potentially introducing a source of risk. So, what if, instead of securing the middleware independently, there was a way to leverage the existing trust network, the Ethereum validator set, to achieve this?

At this point, it might already sound familiar. Let's recall what was mentioned earlier about the core idea behind EigenLayer:

A mechanism to leverage the existing trust network to do stuff it wasn't originally meant to do

That’s exactly what EigenLayer does via a restaking mechanism. EigenLayer allows stakers to expose their staked ETH (stETH, rETH and cbETH) to additional slashing conditions in exchange for securing middleware, thereby leveraging the existing Ethereum validator set for security/trust.

As an alternative to a standalone shared sequencer network, rollups may choose to utilize a middleware solution like EigenLayer for decentralized and potentially shared sequencing. One example of a Rollup-as-a-Service (RaaS) framework that adopts this approach is AltLayer, where re-staking EigenLayer nodes take on the role of sequencing and transaction ordering.

This integration offers dual advantages: first, it promotes more decentralization, and second, it relies on Ethereum's well-established and resilient trust network for security. This aligns with AltLayer's ultimate vision, which involves harnessing the power of re-staking nodes within the EigenLayer network to enhance the decentralization of sequencers that maintain the Flash Layers.

Once this integration is fully realized, users will be able to initiate a Flash Layer using the AltLayer rollup launchpad. During this initiation, they will have the flexibility to determine the number of sequencers required for the rollup's operation. These sequencers are selected from a pool of validators who have voluntarily chosen to support Flash Layers, thanks to EigenLayer's re-staking mechanism. In essence, this intricate system leverages existing assets and structures, combining them in innovative ways to enhance both the security and decentralization of blockchain rollups.

SUAVE

On a high level, SUAVE is an independent network, acting as a shared mempool and decentralized block builder for other blockchain networks. However, SUAVE is not a general-purpose smart contract platform that rivals Ethereum or any other participating chains. On the contrary, SUAVE focuses on unbundling the mempool and block builder role from existing chains, offering a highly specialized plug-and-play alternative. By design, SUAVE is able to support any L1 or L2 network and could potentially serve as a shared sequencer for rollups as well.

However, there are differences here, which can be confusing, especially when SUAVE is referred to as a 'sequencing layer' or a 'decentralized block builder for rollups.' It is important to note that SUAVE is, by design, very different from the shared sequencer designs described earlier in this report.

Let’s consider how SUAVE could interface with Ethereum. SUAVE would not be integrated into the Ethereum protocol, and users would simply send their transactions into its encrypted mempool. The network of SUAVE executors can then produce a block (or partial block) for Ethereum (or any other chain). These blocks would compete against the blocks of traditional centralized Ethereum builders. Ethereum proposers can listen for both and choose which they would like to propose for inclusion in the canonical chain.

Similarly, in the L2 case, SUAVE would not replace the mechanism by which a rollup selects its blocks. However, rollups could potentially implement a PoS consensus that works similarly to consensus on Ethereum L1, where sequencers/validators could subsequently select a block produced for them by SUAVE. Alternatively, they could let a shared sequencer (based on a leader rotation mechanism) choose blocks built by SUAVE.

By design, the shared sequencer designs described in this report should be compatible with PBS, in which users submit transactions to a private mempool, and after that, a trusted third party (TTP) assembles a bundle of transactions, obtaining a blind commitment from a sequencer node (i.e. miner/validator) to propose the block.

Decentralized block-building designs like SUAVE aim to decentralize this TTP. These designs carry great promise to mitigate the impact of MEV on users, especially when coupled with a decentralized sequencing layer. Therefore, shared sequencers are an essential and complementary component required to combat the negative externalities of MEV on the blockchain ecosystem in conjunction with decentralized block-building services like SUAVE.

SUAVE also holds great potential for improving security guarantees in the context of cross-chain MEV and cross-rollup atomicity. Certainly, the question of how SUAVE might collaborate with rollup sequencers in practice as they decentralize, and potentially with Shared Sequencers, is quite interesting.

For example, Espresso seems confident that SUAVE aligns with their ESQ solution and can operate as builders. In this context, the traditional 'Shared Proposer' is analogous to the 'Shared Sequencer,' while 'Shared Builder' translates to 'SUAVE.' In this system, a builder (like SUAVE) can obtain a blind commitment from the sequencer (acting as the proposer) to propose a specified block, with the sequencer only being aware of the total utility or bid they gain from that proposal, remaining oblivious to its contents.

Revisiting the scenario of a searcher aiming for cross-chain arbitrage: Using SUAVE, one could construct and dispatch two distinct blocks:

Block 1 (B1) that houses Trade 1 (T1) - purchasing ETH inexpensively on Rollup 1 (R1).

Block 2 (B2) embedding Trade 2 (T2) - selling ETH at a premium on Rollup 2 (R2).

There’s a conceivable scenario where B1 triumphs in its auction while B2 doesn't, or the reverse. Interestingly, the dynamics shift if both rollups opt for a shared sequencer. Given that shared sequencer nodes lack a deep understanding of transactions, they lean on entities like SUAVE or other MEV-aware builders to form a complete block efficiently. In such an environment, SUAVE executors can offer both B1 and B2 to the shared sequencer with a stipulation that the blocks should either be executed together or dismissed altogether. This setup ensures:

SUAVE (Shared Builder) guarantees the resultant state if B1 and B2 are collaboratively executed.

Shared sequencer (Shared proposer) assures the joint and coordinated execution of B1 and B2.
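The division of labor between builder and shared sequencer can be sketched as an all-or-nothing inclusion rule. The types and the `open_slots` notion are hypothetical; the sketch only models the sequencer's side (joint inclusion), since the resulting state is the builder's responsibility.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BuiltBlock:
    rollup: str                # target rollup, e.g. "R1"
    txs: tuple[str, ...]       # opaque to the sequencer
    bid: int                   # what the builder pays for inclusion


def include_atomic_bundle(bundle: list[BuiltBlock],
                          open_slots: set[str]) -> dict[str, BuiltBlock]:
    """Include every block in the bundle, or none of them."""
    targets = [block.rollup for block in bundle]
    if len(set(targets)) != len(targets):
        return {}              # two blocks compete for the same rollup slot
    if not set(targets) <= open_slots:
        return {}              # at least one target slot is unavailable
    return {block.rollup: block for block in bundle}


b1 = BuiltBlock("R1", ("T1: buy ETH",), bid=5)
b2 = BuiltBlock("R2", ("T2: sell ETH",), bid=7)
print(include_atomic_bundle([b1, b2], open_slots={"R1", "R2"}))
print(include_atomic_bundle([b1, b2], open_slots={"R1"}))
```

With separate sequencers per rollup, there is no single party that can evaluate this condition, which is why B1 can win its auction while B2 loses.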

Moreover, SUAVE offers a unique capability of defining preferences for cross-domain transactions. For illustration, consider a user indicating to SUAVE their desire to have two trades executed at a specific block height for arbitrage:

Trade 1 (T_1) - Buying ETH at $2,000 on Rollup 1 (R_1) in Block 1 (B_1).

Trade 2 (T_2) - Selling ETH at $2,100 on Rollup 2 (R_2) in Block 2 (B_2).

Considering the rollups might operate at varying block times (R_1 at 1 second and R_2 at 10 seconds), it's feasible for T_1 to complete its leg of the arbitrage, only for T_2 to fail.

SUAVE’s strength lies in its non-prescriptive approach, focusing on flexibility to ensure coordination across any domain or user. Every domain presents distinct challenges, and users have varying expectations and risk appetites. If a user insists on the execution of both trade legs, the executor assumes the risk of one leg failing. This could mean using their capital for both trades, with reimbursement from the user's locked capital only upon the successful execution of both trades. Failure implies that the executor bears the risk. Such a system necessitates adept executors who can take price execution risks for the given statistical arbitrage. Consequently, fewer executors might participate due to a lack of capital or risk aversion. However, SUAVE is equipped to accommodate any path, allowing users and executors to engage based on their individual risk thresholds. While SUAVE might not offer 'technical cross-domain atomicity,' it effectively delivers 'economic cross-domain atomicity' from the user's viewpoint, albeit with potential risk for the executor.

Financial incentives of sequencing

Speaking about economic alignment, in a modular architecture, various individual components must generate revenue to ensure long-term sustainability. This applies to the sequencer as well, which benefits from clear revenue generation mechanisms.

The primary revenue source involves charging a fee to the rollup users, or the rollups they interact with, when they utilize the sequencer. This fee can take two different forms: a flat fee, for example, 0.1 gwei (similar to the Arbitrum way), or a variable percentage dependent on the fee charged by the rollup to the user. It could even be argued that sequencer fees could be relatively higher than the competition, given the underlying sequencer layer achieves a decent network effect.
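The two fee forms can be compared with a few lines of integer arithmetic. The per-transaction interpretation of the flat fee, the use of basis points for the percentage, and the function names are assumptions for illustration only.

```python
GWEI = 10**9  # wei per gwei


def flat_fee(fee_wei: int = GWEI // 10) -> int:
    """Flat sequencer fee; the default is 0.1 gwei expressed in wei."""
    return fee_wei


def percentage_fee(rollup_fee_wei: int, share_bps: int) -> int:
    """Sequencer fee as a share (in basis points) of the rollup's user fee."""
    return rollup_fee_wei * share_bps // 10_000


# Under these assumptions, a 5% share of a 2 gwei rollup fee
# matches the 0.1 gwei flat fee.
print(flat_fee(), percentage_fee(2 * GWEI, share_bps=500))
```

A flat fee gives predictable revenue per transaction, whereas a percentage ties sequencer revenue to whatever the rollup charges its users.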

While Sreeram’s point is valid, we would argue that there's a more nuanced perspective to consider. A rollup still has the option to utilize a shared sequencing layer, even if it results in higher costs for the designated rollup than its competitor. This hypothesis assumes that sequencers can establish network effects and are not easily forked, especially when active or high-value rollups are integrating their infrastructure, granting them a competitive advantage over time. When a sequencer attracts multiple rollups built on its infrastructure, it creates an additional source of income: MEV.

It's important to note that engaging in toxic MEV is not desirable for end-users and can harm the competitiveness of the sequencer in the long term. Remember that it is relatively easy to fork away and replace the harmful sequencer with a more beneficial sequencer. This doesn’t mean that there are no legitimate ways to generate revenue from MEV, such as:

Arbitrage: Sequencers can prioritize transactions that benefit them or their partners, such as taking advantage of price differences between decentralized exchanges or carrying out liquidations.

MEV Auctions: Some blockchains have introduced mechanisms like order flow auctions, where sequencers can bid for the right to include specific transactions in their batches, with the highest bidder securing these transactions. This transparent approach allows sequencers to earn MEV revenue without hurting end users.

Estimating the exact revenue can be challenging, as it depends on variables like the number of rollups integrated with the sequencer and the intra-domain transaction volume. Another potential source of MEV revenue is inter-domain MEV. Sequencers that attract multiple rollups facilitating atomic communication create opportunities for MEV, leading to an additional revenue stream. However, it raises questions about who should benefit from this new revenue source: the corresponding rollups, the users, or the sequencer itself. One possible solution is to distribute this MEV equally among all stakeholders involved; however, this remains an intriguing topic that requires further exploration as well as actual experimentation and implementation.

Sequencing proposals

The future of sequencing in blockchain holds immense potential for transformative advancements. As the technology continues to mature, we can expect to see even more decentralized, efficient, and secure sequencing solutions emerge. In this chapter we will cover some of these novel designs.

Distributed Validator Technology

Distributed Validator Technology (DVT) is an innovative open-source mechanism that decentralizes the role of a validator by permitting multiple network validators, managed by various entities, to collaboratively function as a singular validator. The notable implementation by Obol Network distributes a validator's private key among its Distributed Validator (DV) 'cluster.' As each validator in the cluster becomes active, they sign attestations using their portion of the shared key. These attestations are then amalgamated to validate as a complete validator node. For a block proposal to succeed, every member of the DV cluster needs to sign identical data. The cluster has an inherent coordination layer to ensure consensus before proposing any block. Obol's DVT is fortified by a GoLang-based middleware named Charon, which streamlines validator coordination within its cluster. This framework ensures Byzantine fault tolerance using QBFT consensus and can still propose blocks assuming a supermajority of the nodes are honest and functional.
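The idea of splitting one key across a cluster so that only a threshold of members is needed can be illustrated with Shamir secret sharing. This is a teaching sketch and not Obol's implementation: the function names and field modulus are our own choices, and the sketch reconstructs the secret in one place purely to show the threshold property, which a threshold-signing cluster would avoid doing.

```python
import secrets

PRIME = 2**127 - 1  # Mersenne prime used as the field modulus


def split_secret(secret: int, threshold: int, n_shares: int) -> list[tuple[int, int]]:
    """Split `secret` so that any `threshold` of `n_shares` shares recover it."""
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]

    def poly(x: int) -> int:
        acc = 0
        for c in reversed(coeffs):       # Horner evaluation mod PRIME
            acc = (acc * x + c) % PRIME
        return acc

    return [(x, poly(x)) for x in range(1, n_shares + 1)]


def recover_secret(shares: list[tuple[int, int]]) -> int:
    """Lagrange interpolation at x = 0 over the prime field."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


shares = split_secret(secret=123456789, threshold=3, n_shares=4)
print(recover_secret(shares[:3]) == recover_secret(shares[1:]))
```

Any three of the four shares yield the same key, so one offline or faulty cluster member does not stop the validator from operating.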

The implementation by Obol eliminates the risk associated with a singular point of failure for validators. As long as the majority of the DV cluster operates honestly and remains online, block production continues seamlessly. Embracing DVT ensures not only enhanced geographical dispersion (thereby mitigating risks related to regulatory crackdowns and systemic issues like power outages) but also empowers validators with the capability to run multiple consensus and execution clients. This subsequently enhances the robustness of the validator set. Additionally, DVT democratizes entry into the validator space, as sub-validators can collaboratively meet the financial prerequisites. In essence, Obol’s framework offers a strategic solution to technical, geographic, and financial challenges plaguing the validation of Proof-of-Stake networks, further enhancing uptime and decentralization.

The DST Proposal

A mechanism proposed by Figment called Distributed Sequencer Technology (DST) builds upon the foundation set by DVT to decentralize rollups. Instead of a singular sequencer machine, DST assigns the responsibility to a cluster, the 'DS Cluster.' This not only ensures fault tolerance, the potential for diverse clients, and geographic spread but also safeguards against the risks of malicious behaviors, outages, and other systemic threats. DST's ability to distribute sequencer clients across its cluster guarantees L2 rollup functionality, even when individual components falter. It offers a vital mechanism that ensures network liveness, a prerequisite for providing a swift, efficient, and reliable user experience. DST's redundancy ensures that the sequencer remains operational, allowing a supermajority to handle transactions, regardless of the availability of any individual sequencer in the cluster.

For Ethereum's Layer 2s, achieving a sufficient degree of decentralization remains an important objective. DST provides a way for progressive decentralization, simplifying the shift towards rollup decentralization. Progressive decentralization entails the distribution of block creation and production across multiple sub-sequencers within a DS cluster, leading to reduced risks inherent in single sequencer setups.

DST's contribution to L2 solutions also emphasizes the importance of geographical distribution. Just as Ethereum validators employing DVT might decide to operate globally, DST sequencers could harness the advantages of worldwide distributed clients. While this may introduce latency, the strategic use of geographical distribution can optimize system liveness and robustness.

The consensus mechanism in Obol’s DVT framework mirrors the attributes of Proof of Authority (PoA). PoA entrusts a select group of nodes with the validation and addition of transactions to the blockchain. Because validator identities are transparent, any malicious activity can be held accountable. However, PoA does present centralization risks due to the enhanced control the permissioned validators have over the network. DST, in many ways, inherits the traits of PoA, introducing steps toward sequencer decentralization, elevating operator accountability, enhancing network reliability, and bolstering resistance to censorship.

Even for centralized rollup designs, DST offers tangible benefits. It caters to the critical need for liveness (uninterrupted service), which a single sequencer cannot guarantee. DST provides centralized rollups with a method to maintain sequencing control while ensuring consistent availability. This becomes especially crucial for rollups focused on applications like gaming, which demand low latency and high reliability.

Shared Validity Sequencing

Shared Validity Sequencing represents a pioneering sequencer design that enables atomic cross-chain interoperability between optimistic rollups. At the core of this design are three essential components:

A mechanism that allows the shared sequencer to process cross-chain actions.

An advanced block-building algorithm tailored for the shared sequencer to process these actions, ensuring atomicity and conditional execution guarantees.

Implementation of shared fraud proofs among the participating rollups to enhance the integrity of cross-chain actions.

To illustrate this concept, Umbra presents a system that facilitates atomic burn and mint actions across two rollups: the success of a burn action on rollup A depends on the corresponding success of a mint action on rollup B. This framework can be extended to support arbitrary cross-rollup message passing.

Block Building in Shared Validity Sequencing

Under this design framework, rollups A and B operate under a shared sequencer that is responsible for posting transaction batches and proposed state roots to the L1 for both rollups. This sequencer could be either centralized, like contemporary rollup sequencers but for multiple rollups, or a decentralized shared sequencer with a leader election mechanism or voting-based consensus algorithm. In any case, this setup allows for the posting of transaction batches and proposed state roots for both rollups to the L1 within a single transaction.

Upon receiving transactions, the shared sequencer constructs blocks for rollups A and B. Transaction execution on rollup A triggers the sequencer to verify its interaction with the MintBurnSystemContract. The successful execution of the burn function interaction prompts the shared sequencer to initiate a corresponding mint transaction on rollup B. Depending on the success or failure of this mint transaction, subsequent actions are determined, preserving the essence of atomicity.
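The block-building rule above can be illustrated with a minimal sketch. It is a toy model under stated assumptions, not Umbra's implementation: the `Rollup` class, the `supply_cap` field (included only so that a mint can fail) and the function `sequence_burn_and_mint` are all hypothetical names, and the MintBurnSystemContract is reduced to two balance checks. The sequencer simulates both legs and includes the burn on rollup A only if the matching mint on rollup B would succeed.

```python
from dataclasses import dataclass, field


@dataclass
class Rollup:
    """Toy rollup state: token balances plus the actions included so far."""
    name: str
    balances: dict = field(default_factory=dict)
    supply_cap: int = 1_000  # hypothetical limit, only here so a mint can fail
    block: list = field(default_factory=list)

    def total_supply(self) -> int:
        return sum(self.balances.values())

    def can_burn(self, user: str, amount: int) -> bool:
        return self.balances.get(user, 0) >= amount

    def can_mint(self, amount: int) -> bool:
        return self.total_supply() + amount <= self.supply_cap


def sequence_burn_and_mint(src: Rollup, dst: Rollup, user: str, amount: int) -> bool:
    """Include the burn on `src` only if the matching mint on `dst` also succeeds."""
    # Simulate both legs before touching any state.
    if not (src.can_burn(user, amount) and dst.can_mint(amount)):
        return False  # neither leg is included, so atomicity is preserved

    src.balances[user] -= amount
    src.block.append(("burn", user, amount))
    dst.balances[user] = dst.balances.get(user, 0) + amount
    dst.block.append(("mint", user, amount))
    return True


rollup_a = Rollup("A", balances={"alice": 100})
rollup_b = Rollup("B", balances={"bob": 950})

first = sequence_burn_and_mint(rollup_a, rollup_b, "alice", 40)   # mint fits under the cap
second = sequence_burn_and_mint(rollup_a, rollup_b, "alice", 40)  # mint would exceed the cap
```

In this run the first transfer is included on both rollups, while the second is dropped from both because the mint on rollup B would fail. In the actual design, a shared fraud proof would let anyone challenge a sequencer that posted a burn without its corresponding mint.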

Properties and Implications of Shared Validity Sequencing

Rollups enabled with atomic cross-rollup transactions through Shared Validity Sequencing can be thought of as a monolithic chain with multiple shards. Compared to a monolithic rollup, this approach offers several advantages, including local fee markets, sophisticated pricing mechanisms, and support for diverse execution environments, making it ideal for app-specific rollup use cases.

In contrast to shared sequencer designs that solely focus on transaction ordering, Shared Validity Sequencing places additional demands on the sequencer, particularly in terms of transaction execution. Furthermore, full node operators for one rollup must also operate nodes for the other one due to the interdependent validity of their states.

Shared Validity Sequencing inherently links the validity of participating rollups due to their shared composability. This design choice impacts the sovereignty of applications, positioning them between monolithic chains and app-chains in terms of autonomy.

ZK Rollup compatibility

While zk-rollups inherently support asynchronous interoperability, atomic interoperability provides enhanced user experiences and enables synchronous composability. Although Shared Validity Sequencing is primarily designed for optimistic rollups, it can be adjusted to accommodate zk-rollups with modifications to the MintBurnSystemContract and block-building process. Due to the simplified state verification in the zk-rollup framework, node operators for rollup A are not required to run nodes for rollup B, simplifying the verification process.

Based Sequencing

An interesting approach to decentralizing sequencing in a tokenless manner was proposed by Ethereum researcher Justin Drake and is generally referred to as based rollups. These are also known as L1-sequenced rollups, meaning that the rollup's sequencing (transaction ordering) is done on the same L1 network on which the rollup is based, typically Ethereum.

Specifically, for Ethereum, it means that all the searchers, builders and proposers in the network take part in the rollup's sequencing, decentralizing the process. But first, let's have a look at how blocks are built on Ethereum.

Most Ethereum network blocks are currently forged using a middleware named MEV-Boost. In the future, this procedure will be inherent to the Ethereum protocol through enshrined PBS.

Searchers initially scan the mempool for MEV opportunities, bundle these, and submit them to the builders, who create comprehensive blocks to maximize MEV revenue. These blocks are then handed over to proposers and added to the Ethereum blockchain. The process entails searchers and builders bidding nearly as much MEV revenue as they can generate to have their bundles and transactions selected, resulting in a flow of MEV revenue from searchers → builders → proposers.
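The revenue flow described above can be made concrete with a small worked example. This is a simplified sketch with made-up numbers, not a model of how MEV-Boost is implemented: the function names, the `margin` each builder keeps and the block `capacity` are all assumptions chosen for illustration. Searchers bid slightly less than the MEV they can extract, builders pass on most of what they collect, and the proposer picks the highest-bidding block.

```python
def build_block(bundles, capacity):
    """Builder: fill the block with the highest-bidding bundles."""
    chosen = sorted(bundles, key=lambda b: b["bid"], reverse=True)[:capacity]
    return chosen, sum(b["bid"] for b in chosen)


def run_auction(bundles, builders, capacity=2):
    """Proposer: accept the builder block that carries the highest bid."""
    offers = []
    for name, margin in builders.items():
        chosen, collected = build_block(bundles, capacity)
        offers.append({
            "builder": name,
            "bundles": chosen,
            "collected": collected,      # what the builder receives from searchers
            "bid": collected - margin,   # what the builder passes on to the proposer
        })
    return max(offers, key=lambda o: o["bid"])


# Hypothetical searcher bundles: MEV found and the bid offered to builders.
bundles = [
    {"searcher": "s1", "mev": 100, "bid": 95},
    {"searcher": "s2", "mev": 60, "bid": 55},
    {"searcher": "s3", "mev": 30, "bid": 28},
]
# Hypothetical builders and the margin each keeps for itself.
builders = {"b1": 5, "b2": 10}

winner = run_auction(bundles, builders)
searcher_profit = sum(b["mev"] - b["bid"] for b in winner["bundles"])
builder_profit = winner["collected"] - winner["bid"]
proposer_revenue = winner["bid"]
```

With these numbers, 160 of MEV is captured by the two included bundles: the searchers keep 10, the winning builder keeps 5, and the proposer receives 145, which shows how competition pushes most of the value toward the proposer.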

Democratizing MEV extraction through PBS (which also gives solo validators a chance to participate in the MEV game) will likely turn it into a race to the bottom, ultimately minimizing MEV extraction as much as possible. However, MEV won't be gone entirely.

Based rollups have several notable benefits compared to traditional rollup networks that manage their own sequencing.

Resilience & decentralization:

They rely on Ethereum for sequencing transactions, thereby capitalizing on Ethereum's robustness and decentralized validator set. This approach negates the risk of sequencer or validator failure unless the Ethereum network itself faces an issue.

Simplicity & no token requirement:

Based rollups are simpler and don't require tokens for sequencing, as this process is handled by the Ethereum network.

However, based rollups have certain limitations too:

Revenue flow:

The rollup network's MEV revenue flows into Ethereum L1, without accumulating value on Layer 2 (L2).

User experience:

Relying on Ethereum's finality can be unfriendly from a user experience perspective. However, researchers are currently exploring ways to impose faster finality in advance through inclusion lists, builder bonds and more.

Economic alignment:

When an MEV opportunity is spotted in based rollups, Ethereum network searchers and builders submit corresponding bids, allowing the revenue from based rollups’ MEV to flow naturally into Ethereum L1.

Outlook

In conclusion, the landscape of blockchain sequencers is set for an exciting and transformative journey. As the blockchain ecosystem evolves, sequencers are poised to undergo significant changes, shifting from centralized designs to more decentralized, efficient, and adaptable solutions. These advancements in sequencing technology are pivotal in enhancing transaction efficiency, scalability, and security for rollups.

Moreover, the introduction of innovative revenue models, such as MEV capture and decentralized governance, promises to bring novel economic incentives to the world of sequencers. While the field is still largely theoretical, with various teams working diligently to roll out sequencing solutions, it's important to acknowledge that no production-ready product exists yet. However, progress is being made: Astria is one of the first to launch its shared sequencer, dubbed ‘Dusknet’, and Radius will be following suit, so expect their announcement soon!

As the industry continues to mature, many unknowns and challenges lie ahead, and the competitive landscape will likely evolve rapidly. Network effects and interoperability will play a crucial role in determining which approaches gain widespread adoption. With these exciting developments on the horizon, the future of sequencers holds great promise in shaping the growth and sustainability of rollups in the blockchain space.

Also, since we first started working on this article, many updates and design proposals have been published. Due to the length of the article and the timing of their releases, we haven't covered them here, but we still want to bring them to your attention. A while ago, Aztec released an RFP inviting anyone to submit sequencer designs. In total, eight designs were deemed interesting, which can be found in this post, but only two, Fernet and B52, have been selected for further research. Metis, another L2 working on its own sequencer, came up with a design it dubbed a ‘decentralized sequencer pool’, which is part of its goal to become a fully decentralized and functional rollup. That the modular ecosystem is taking over can arguably be demonstrated by Rome Protocol, which is building a Solana-based shared sequencer.

With regard to shared sequencing, the maturing ecosystems of modular building blocks and development frameworks will surely be a powerful catalyst. With many builders already opting for (app-specific) rollup implementations rather than standalone L1s or smart-contract-based applications, a clear trend towards app-specific rollups seems to be emerging. The traction that tech stacks like the OP Stack or Polygon’s CDK have recently been gaining is impressive evidence of this. The trend will likely be further amplified by Rollup-as-a-Service (RaaS) providers such as Initia and Vistara, which will provide fully customizable development frameworks with one-click deployment, abstracting away the complexity that normally comes with building a rollup from scratch.

Since many of these RaaS frameworks are geared towards maximum flexibility, they will likely all integrate multiple shared sequencing layers that builders can choose from. This makes a lot of sense, as we see the Decentralization-as-a-Service that shared sequencers provide as primarily interesting for the long tail of rollups, which can include all sorts of rollup execution layers, often optimized for specific use cases. These might struggle to build out a secure and decentralized sequencing mechanism on their own. Additionally, the composability benefits that shared sequencers introduce are likely to be especially valuable to these kinds of app-specific, otherwise isolated, execution layers.

We expect most of the larger general-purpose rollups (or rather ecosystems) to build out their own sequencer decentralization mechanisms, to keep sequencing and potentially the revenue flows under their control. However, while Polygon, zkSync and Optimism have all made announcements hinting at exactly this, Arbitrum seems to be the exception that proves the rule, with Offchain Labs recently announcing a partnership with Espresso Systems.

Many thanks to my good friend Zerokn0wledge, co-founder of Redacted Research, for co-authoring this article with me! We’d like to thank Tariz (Radius), Dino Eggs (Fluent Labs), NDW (Castle Capital), Lana Ives (Madara), Apoorv Sadana (Madara), Eshita Nandini (Astria) & Josh Bowen (Astria) for reviewing this article.

Disclaimer: Nothing in this article is financial advice and solely serves educational purposes. The author(s) may have vested interests in some of the projects that are mentioned in this article.